Human decision gates

Human-in-the-loop controls that require user permission before AI acts and human verification before high-stakes decisions

Key characteristics

AI should pause for permission before performing restricted actions

Validation scales with risk: simple accept/reject for edits, multi-reviewer approval for critical systems

Some workflows require reviewers who were not involved in the AI collaboration, so they can catch biases the original user might miss

About

Computers can’t take responsibility for their actions. If AI makes up information or applies irreversible, unwanted changes, a human is still the one held accountable for trusting it or letting it ship.

That’s why transparency isn’t enough on its own. Beyond showing what the AI did (see labor transparency), the system should give users supervision over the AI’s work and, in high-impact cases, prompt them to review its output before submission.

Human decisions should be introduced at two points:

1. Control AI workflow – Authorisation

What AI can and cannot do within the tool should be rigorously configured to prevent unwanted changes and reduce the chance of exposing sensitive data. When AI does need to perform a restricted action, it should pause and either ask the user to perform that action, or ask for approval to do it itself.

For example, AI could ask for:

Permission to create or edit files

The user to log in so AI can access a required account

Confirmation to continue working after extensive token use to conserve credits (see usage management)
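The authorisation flow described above can be sketched as a small gate that sits between the AI and any action it wants to perform. This is a minimal illustration, not any product's implementation: `PermissionGate`, `Decision`, the action names, and the `ask_user` callback are all hypothetical, and a real tool would replace the callback with a UI prompt.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Decision(Enum):
    APPROVE = "approve"
    DENY = "deny"


@dataclass
class PermissionGate:
    # Callback that asks the human (hypothetical; a real tool would show a prompt).
    ask_user: Callable[[str, str], Decision]
    # Actions the AI may perform without asking (hypothetical names).
    allowed: set[str] = field(default_factory=set)
    # Record of every request and the human's answer.
    log: list[tuple[str, str]] = field(default_factory=list)

    def run(self, action: str, detail: str, do: Callable[[], object]):
        """Run `do` only if the action is pre-approved or the user approves it."""
        if action not in self.allowed:
            decision = self.ask_user(action, detail)  # the AI pauses here
            self.log.append((action, decision.value))
            if decision is Decision.DENY:
                return None  # nothing is executed, nothing changes
        return do()


# Usage: reading is pre-approved, editing requires a human decision.
gate = PermissionGate(ask_user=lambda a, d: Decision.DENY, allowed={"read_file"})
gate.run("read_file", "notes.txt", lambda: "contents")    # runs immediately
gate.run("edit_file", "notes.txt", lambda: "overwritten")  # blocked by the user
```

Keeping the allow-list as explicit configuration, rather than scattering checks through the code, is one way to make what AI can and cannot do easy to audit.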

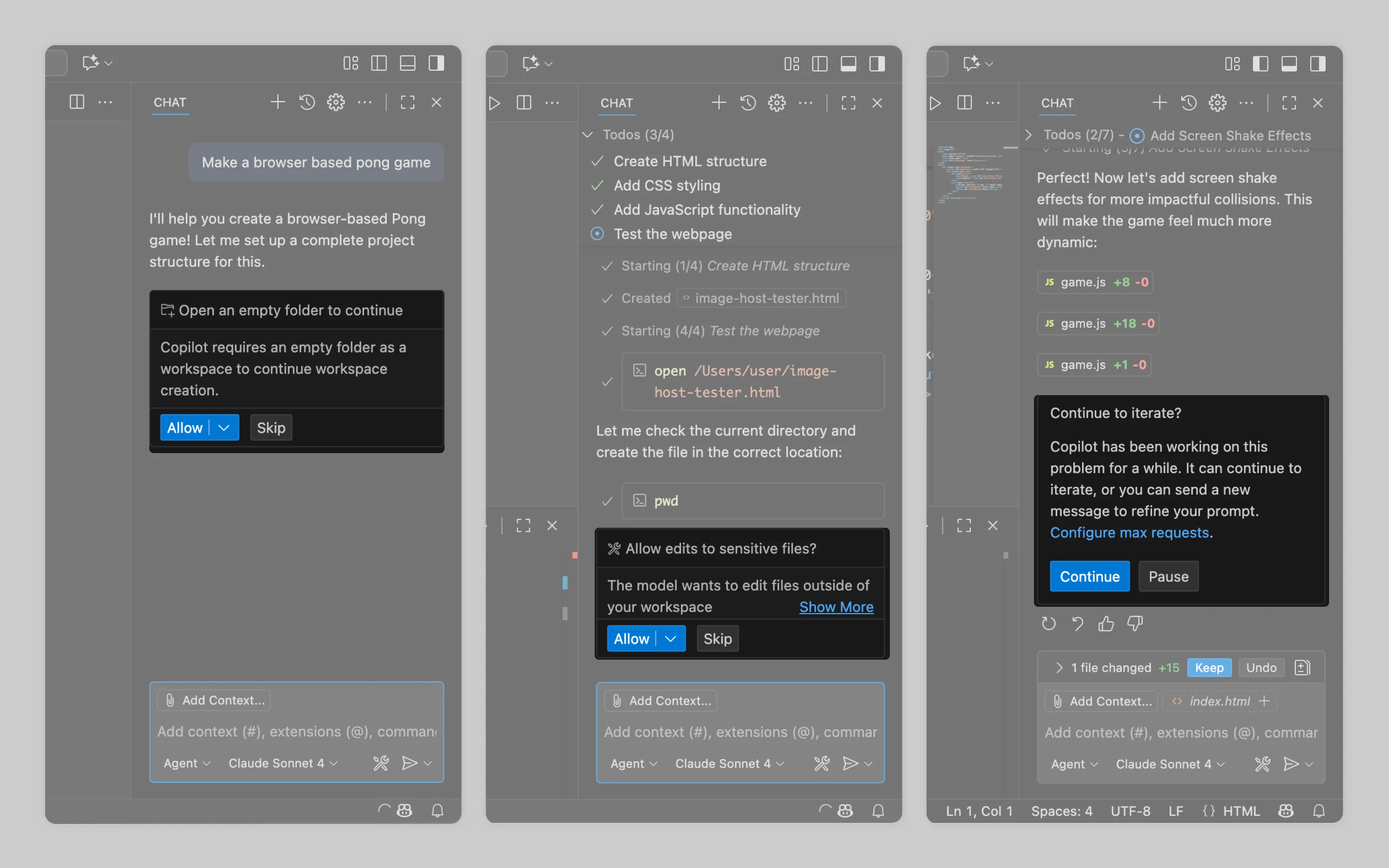

VS Code Copilot regularly prompts users to approve restricted actions.

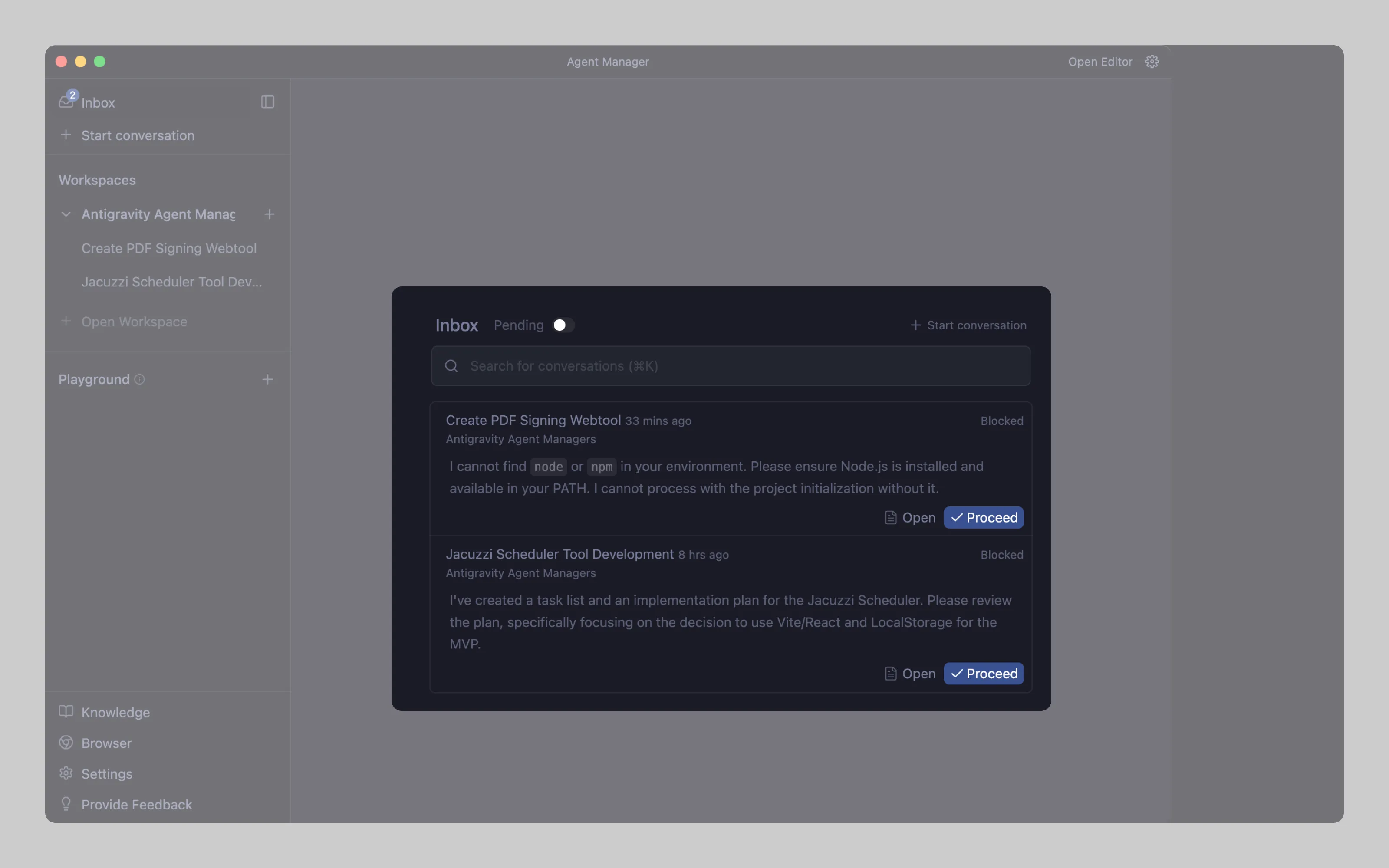

Antigravity takes a proactive approach. During onboarding, it presents a setting that defines what AI can do autonomously versus what requires human approval.

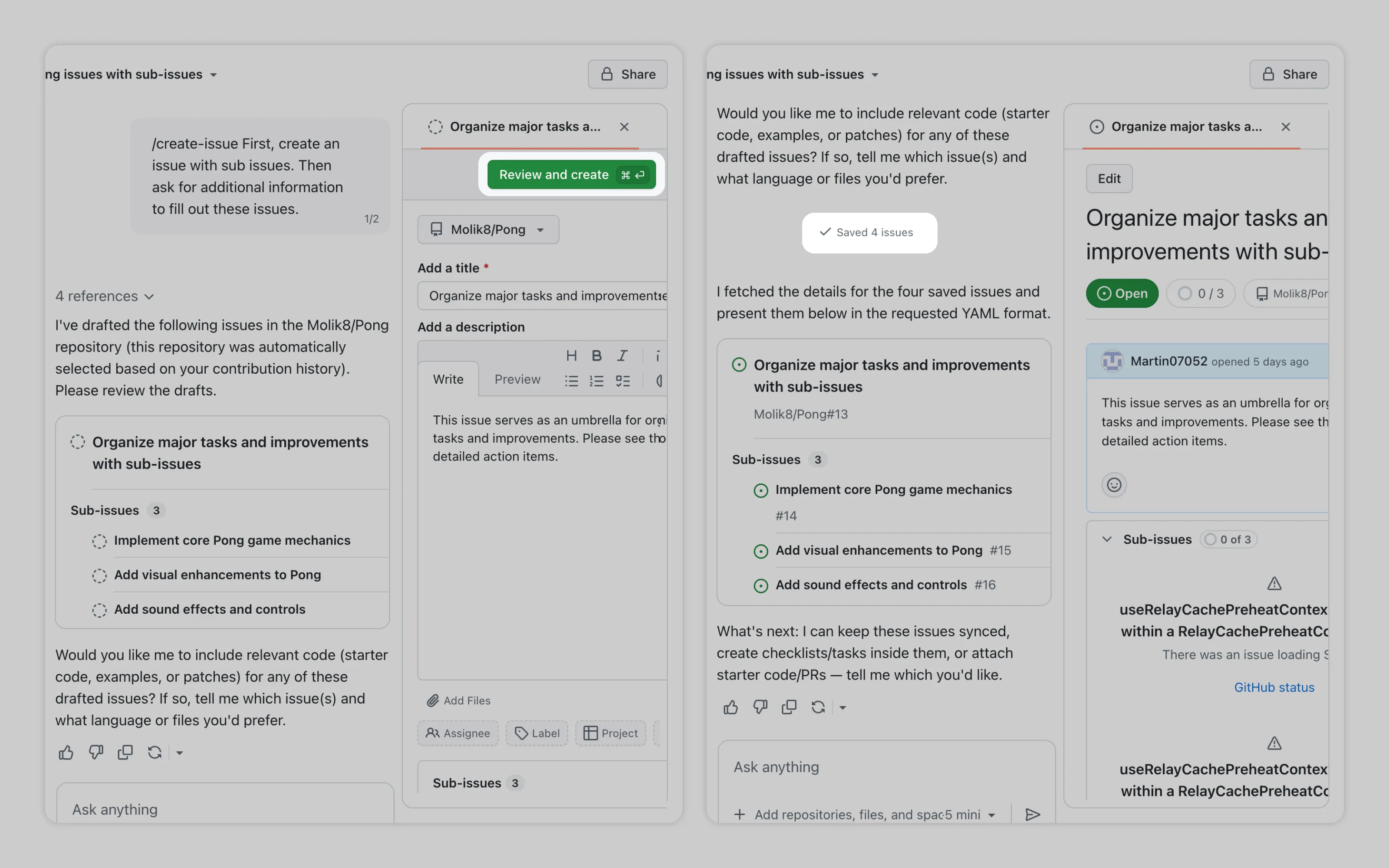

GitHub Copilot pauses after drafting files, requiring user review before creation. Once approved and saved, it confirms the action and continues working.

Antigravity centralizes all pending decisions in its agent manager, where users can quickly review and approve the AI's queued permission requests.

2. Review synthetic work before submission – Validation

Validation requirements should scale with risk. Low-stakes edits need simple controls, while system-critical outputs require more rigorous review processes.

Simple accept/revert decisions

For routine changes to user work, basic output controls suffice. Always show what changed and let users accept or reject it. (See: Output management)
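As a rough sketch of "show what changed, then accept or reject", the snippet below uses Python's standard `difflib` module to produce a diff of the AI's proposal against the user's current text. The function names `show_changes` and `resolve` and the file labels are assumptions made for illustration.

```python
import difflib


def show_changes(original: str, proposed: str) -> str:
    """Return a unified diff so the user can see exactly what the AI changed."""
    diff = difflib.unified_diff(
        original.splitlines(keepends=True),
        proposed.splitlines(keepends=True),
        fromfile="current",
        tofile="ai_suggestion",
    )
    return "".join(diff)


def resolve(original: str, proposed: str, accepted: bool) -> str:
    """Keep the AI's version only if the user accepted it; otherwise revert."""
    return proposed if accepted else original


current = "Ship on Friday.\nNotify the team.\n"
suggestion = "Ship on Monday.\nNotify the team.\n"
print(show_changes(current, suggestion))  # the user reviews this diff
final = resolve(current, suggestion, accepted=False)  # user rejects: text is unchanged
```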

Review and approve process

High-stakes scenarios require forced review before AI-generated content is submitted. This means blocking submission entirely until the user marks the output as verified. Some situations might even mandate multiple reviewers, specifically people who weren't involved in the AI collaboration, to catch biases or errors the original user might miss.

Where this type of verification should be present:

Code submissions that could break production systems

Medical, legal, or business reports driving important decisions

Published articles containing factual claims
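One way to picture risk-scaled validation is a submission object that stays blocked until its tier's requirements are met. The sketch below is illustrative only: the tier names, the `POLICIES` table, the reviewer counts, and the `Submission` class are assumptions, not a prescribed standard.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Policy:
    author_must_verify: bool      # user must mark the output as verified
    independent_reviewers: int    # approvals needed from uninvolved people


# Hypothetical tiers; real thresholds depend on the product and domain.
POLICIES = {
    "low": Policy(author_must_verify=False, independent_reviewers=0),
    "high": Policy(author_must_verify=True, independent_reviewers=0),
    "critical": Policy(author_must_verify=True, independent_reviewers=2),
}


@dataclass
class Submission:
    risk: str                     # "low", "high", or "critical"
    ai_collaborators: set[str]    # people who worked with the AI on this output
    author_verified: bool = False
    approvals: set[str] = field(default_factory=set)

    def approve(self, reviewer: str) -> None:
        self.approvals.add(reviewer)

    def can_submit(self) -> bool:
        policy = POLICIES[self.risk]
        if policy.author_must_verify and not self.author_verified:
            return False
        # Approvals from people involved in the AI collaboration do not count.
        independent = self.approvals - self.ai_collaborators
        return len(independent) >= policy.independent_reviewers


report = Submission(risk="critical", ai_collaborators={"ana"})
report.author_verified = True
report.approve("ana")   # ignored: ana collaborated with the AI
report.approve("ben")
report.approve("chen")
assert report.can_submit()
```

Excluding collaborators at the point where approvals are counted, rather than trusting the UI to hide the approve button, keeps the independence rule enforceable in one place.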

VS Code implements double verification: when submitting code containing AI-generated portions, a second developer who didn't work with the AI must review before the code can be merged.
